ReviewMeta works very hard to position itself as some kind of independent arbiter of Amazon reviews, but an examination of its methods proves two things: 1) ReviewMeta is not very accurate and 2) ReviewMeta does not like being reviewed.

An article from the Washington Post a while back claimed that Amazon is undergoing a fake review crisis. There are problems with Amazon reviews, no doubt, but this article is based on some pretty shaky data – at least in how it pertains to the world of books, which is what I know, and what I’ll focus on here. I can’t speak to the world of diet supplements or fake tan or giant tubs of lube… alas.

Is ReviewMeta Reliable?

The article’s claims are largely based on a flaky site called ReviewMeta, which seems far better at getting publicity for itself than correctly analyzing the trustworthiness of Amazon reviews, which is a pity as it would be a wonderful tool if it were in any way accurate.

As soon as I heard about ReviewMeta, I immediately wanted to test it. Amazon tends to be plagued with all sorts of scams – which I have written extensively about over the last ten years – and I could instantly see the value in a tool which could identify fake reviews or suspicious products.

Naturally, I started with my own books, as I can be pretty sure they have no fake reviews. As well as being the author, I handle all the publishing and marketing personally, and I’m fastidious about the rules both for ethical reasons and commercial ones; my name is literally my brand.

However, ReviewMeta seems to call into question a large number of my reviews and reviewers. And by extension me, I guess. And many of my fellow authors too, it seems, because a large portion of the random selection of books I checked had similar issues. What was going on here?

How ReviewMeta Works

The ReviewMeta site helpfully gives explanations for why its system made these determinations, and you can actually break down each component and get a further explanation. This transparency is hugely commendable.

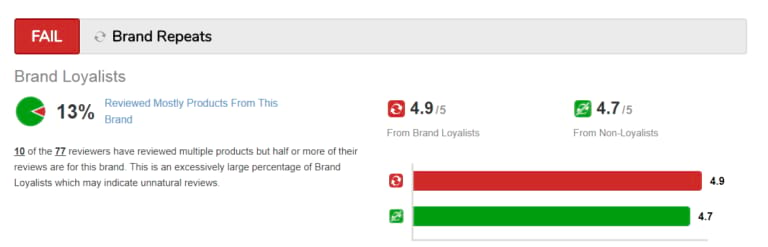

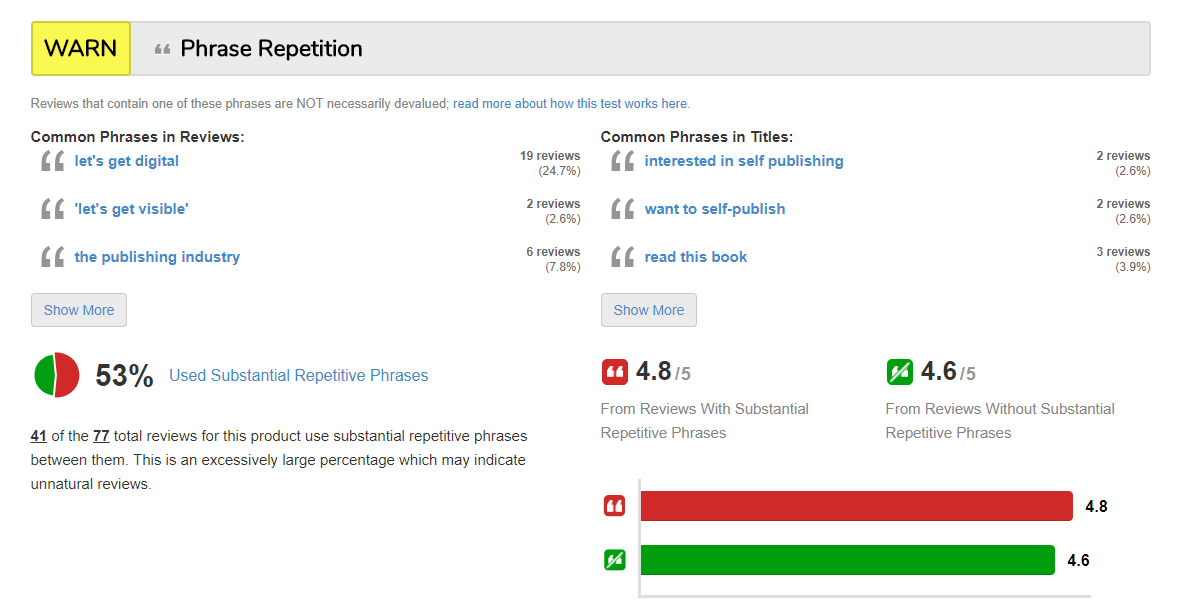

Digging into this data, though, shows the extreme limitations of the site and the way it calculates the trustworthiness of reviews – at least how it pertains to the world of books.

Perhaps it is more accurate for jellybeans or computer peripherals, I really can’t say. But when it comes to books, it makes a number of pejorative assumptions about what is legitimate reader behavior, such as reviewing Book 2 of a series after reviewing Book 1 or mentioning the title of the book in the review, and these routine reader actions cause ReviewMeta to flag these reviews as questionable or suspicious.

This then casts aspersions on the integrity of the authors of these books – who are self-employed people working in an industry where reputation and integrity are critically important, not huge faceless brands… if that matters. Worse still, the site has been aware of these issues for two years, and not only have they not corrected them, they reacted in a hostile way when presented with this information.

Let’s take a look at some concrete examples. Once again, I’m happy to be the guinea pig here, and have all of you (and ReviewMeta) poke and prod my reviews and check the authenticity of same, because I have 100% confidence that they are all genuine.

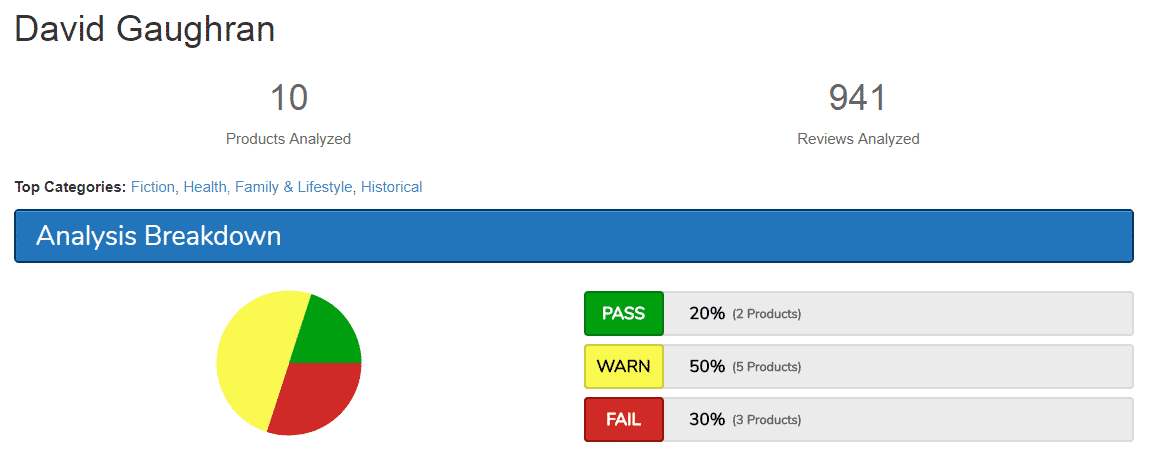

ReviewMeta Case Study: David Gaughran

Here is ReviewMeta’s take on “David Gaughran” the brand and how trustworthy it is (that’s me, btw).

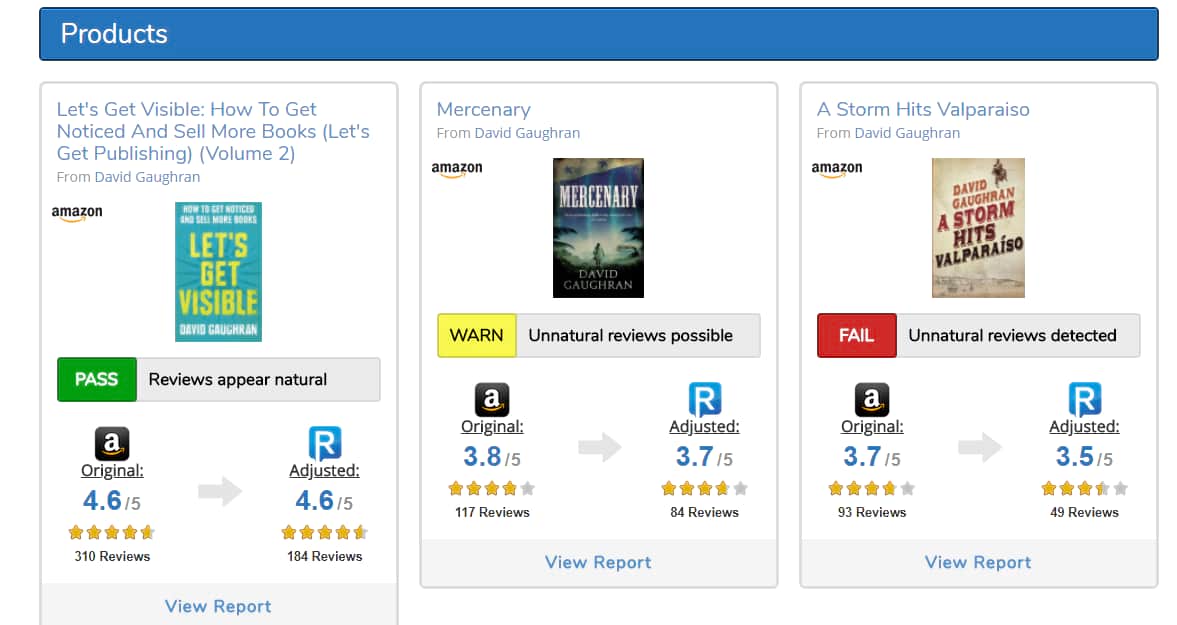

Okay, this doesn’t look good. And if you look down the page it shows each of the products they have assessed that led to this overall brand trustworthiness score. You can see many of my books have “failed” in the eyes of ReviewMeta and “Unnatural reviews detected” has been appended to several of my books.

Crikey.

You can click on each product and see how it came to that determination, and the supposed evidence for each component of that decision. Again, I stress, this transparency is truly commendable.

But this breakdown also reveals the faulty assumptions that led to these incorrect determinations about my reviews. And it’s not just my reviews, of course. These simplistic calculations affect most authors – feel free to test it out yourself.

Of course a reviewer of Book 1 and 2 is likely to review the third book in a trilogy. If you don’t take account of that wholly natural behavior when analyzing book reviews, then all your results will be skewed. Mentioning the title of the book is another pretty common thing that (genuine) book reviewers do, but which ReviewMeta views with extreme suspicion.

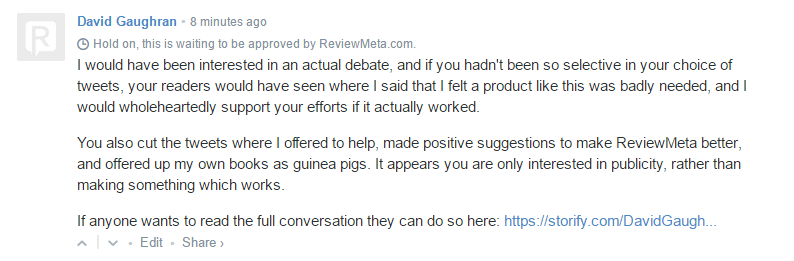

I pointed all this out in a series of tweets to ReviewMeta back in 2016, and they responded with a pissy blog post, which ignored the constructive suggestions I made to improve ReviewMeta and properly take account of the way that reviewers review books – both to avoid all the false positives they seem to be generating, and also to catch the suspicious patterns in book reviews they were missing.

An Overarching Focus on PR

But none of these issues have been addressed by ReviewMeta in the last two years. Instead they seem exclusively focused on publicity. Here is founder Tommy Noonan talking to Techspot in November 2016, then CNET a few months later in February 2017, and there have been similar pieces over the last couple of years (which I couldn’t be bothered linking to) in Forbes, NYMag, Scientific American, Quartz, PBS NewsHour, BuzzFeed, ZDNet, Business Insider, and many, many more.

In all that time of furious self-promotion, I haven’t seen ReviewMeta improve the accuracy of its site.

The sad thing about all of this is that Amazon does have a fake review problem, one which is compounded by Amazon deploying a fake review detection algorithm that seems about as accurate as the one from ReviewMeta, perhaps for similar reasons too. Which means that authors innocent of any wrongdoing get genuine, organic reviews from bona fide reviewers removed every day and the scammers and cheaters with fake reviews keep getting away with it. Sites like ReviewMeta aren’t helping with this problem, they are making it worse.

But the worst part of all, perhaps, is the complete misunderstanding of how Amazon algorithms work. Reviews don’t cause sales, they are a symptom of sales.

Yes, a lot of overwhelmingly positive reviews will sway an on-the-fence purchaser, but they don’t automatically lead to sales – not in the world of books, at least. Maybe if I’m looking for a phone charger and they are all more-or-less fungible, reviews might become the tie-breaker for a lot of people. Not with novels. I don’t care how many reviews the Da Vinci Code has, I’m never going to read it.

The continued media focus on “fake” reviews – driven in part by ReviewMeta’s relentless publicity drive – is taking attention away from much more serious issues that the media have not covered in any depth, such as clickfarming, bookstuffing, incentivized purchasing, and mass gifting.

That’s not to say that ReviewMeta couldn’t serve a useful purpose, or something like ReviewMeta. Unfortunately, ReviewMeta itself doesn’t seem interested in addressing the flawed assumptions underlying its product, and trying to make it more accurate.

Which is such a shame.

I tried to engage with them once more. I left a comment under that prickly blog post responding to my series of tweets on ReviewMeta.

In the most ironic twist since it rained on Alanis Morissette’s wedding day, that comment was deleted. It seems ReviewMeta doesn’t like being reviewed.

Guess Who Doesn’t Like Being Reviewed?

Okay, so I was wrong. Here’s something even more ironic: a user of ReviewMeta on the consumer side left a lengthy review of ReviewMeta on Trustpilot – a genuinely constructive review which sought to identify genuine shortcomings in ReviewMeta, based on their own experience.

And here’s the crazy part: ReviewMeta’s owner was so annoyed – once again – at being subjected to any kind of critical scrutiny that he contacted the reviewer and asked them to change or delete their review.

“This review is hurtful to our business… please consider deleting or changing your review.”

ReviewMeta

Even a fiction author couldn’t make it up.

Very little discussion (none?) here of one of the main problems which is beyond reviewmeta’s control: terrible API performance and even design from Amazon.

Some folks here are speaking as if the metrics are entirely reviewmeta’s fault. Not so.

This is a problem with a LOT of APIs. Google’s various APIs can also create some ludicrous outcomes.

Obviously one is responsible for what one passes along from a source, but from personal experience it’s also true that “trust but verify” is hard. We rely on API quality from vendors. We all suffer when it’s bad.

The lesson? Don’t blame the messenger without considering the carelessness of the big players as well. There’s enough legit outrage to be passed along beyond its most obvious entryway to our experience.

Thanks for taking the time to write this. I’ve mainly been using ReviewMeta for non-book product categories but still very helpful to be more aware of some of the potential limitations. Do you have any recommendations for other Amazon review authenticity checkers?

If you scroll through the comments you will see that alternatives, like Fakespot, suffer from similar limitations. And just to underline how unreliable all such sites are, Fakespot and Reviewmeta identify different reviews on my books as problematic – almost completely different!

Yeah, I read their response to your tweets and the only comment on their page was someone calling you a “serial tweeter” who just wanted publicity for yourself and made them look even better. I am suspicious of that comment being legitimate.

I just checked a few of my books. I received a “Pass” on all of them, and in one case, my adjusted average score went up. However, there were some weird things flagged. The only failing grade was from “Never Verified Reviewers.” Out of 219 reviews on one book, 10 were never verified. Interestingly, those 10 gave reviews that averaged almost a full star below the rest of the reviews.

I did have several warnings, however. The repetitious phrases category was one, where “military science fiction” (9 times), “the main character” (14 times), “four stars” (14 times), and “semper fi” (6 times) were flagged. Oh, and “waste of time and money” was flagged for being used twice.
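To see why phrases like these get caught, here is a minimal sketch of a repeated-phrase check in Python. It is an assumption about the general technique, not ReviewMeta’s actual code: the function name `repeated_phrases`, the three-word window, and the sample reviews are all made up for illustration.

```python
from collections import Counter
import re


def repeated_phrases(reviews, n=3, min_reviews=2):
    """Return n-word phrases that appear in at least `min_reviews` different reviews."""
    counts = Counter()
    for text in reviews:
        words = re.findall(r"[a-z']+", text.lower())
        # Count each phrase once per review, so one long review cannot dominate.
        phrases = {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}
        counts.update(phrases)
    return {phrase: c for phrase, c in counts.items() if c >= min_reviews}


# Hypothetical reviews of a military science fiction novel.
reviews = [
    "Great military science fiction with a likeable lead.",
    "Solid military science fiction. The main character grows on you.",
    "I liked the main character, but the ending dragged.",
]

flagged = repeated_phrases(reviews)
print(flagged)
```

On this toy data the only phrases shared across reviews are “military science fiction” and “the main character”, which are exactly the genre and craft terms any honest reader of such a book would reach for. A check like this cannot tell a copied template from ordinary vocabulary unless it is tuned for the category.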

It was somewhat interesting to read the results, but this isn’t anything I’m going to give much credence.

Just because a review is not from a verified buyer doesn’t mean it’s fake. Books borrowed and read using the Kindle Unlimited subscription program are not tagged as “verified”. In some genres, as many as 70-80% of the books reviewed may come from KU readers.

Hello,

Although I am not an author myself, I was the first person who wrote a review about Reviewmeta.com on the website Trustpilot. This review is a 1-star review, in which I have written my honest opinion and own findings about Reviewmeta and the fact that this website is not very reliable. For example, even if non-verified buyers of products have written a review, Reviewmeta says that there are 0% of fake reviews, just because there is also a certain number of reviews of verified customers. Yet, if there is just one fake review, the number could never go down to 0%, due to the fake reviews. Therefore, their algorithm is far away from the truth and that is what I wrote, including many other facts, which I have found by using their website, as I once believed that it is reliable.

Yet, shortly after I published my review on Trustpilot about Reviewmeta, they even contacted me and asked me to change or even delete my review, because it hurts their business??? First, Reviewmeta is free, so there is no “business” they could make. Second, nobody asked them to do any of this. And third, Reviewmeta themselves know that Amazon is known for sometimes deleting honest reviews from disappointed customers about certain products, especially in the cheap department, and Amazon often faces much criticism because of that. Yet, Reviewmeta asks me to delete my review on Trustpilot about them, just because it is an honest, but 1-star review? They were just asking me to do the same thing that they would always criticize Amazon for! Obviously, I did not delete or change my review, yet, I wrote an update under my original review, as an answer to Reviewmeta’s comment. Thanks to Trustpilot, my review is still online and nothing has been changed. Funny, they even stated to me that the Amazon marking “verified customer” is not an absolute proof that the review is an honest one. Yet, most websites on the net give you the number 1 tip to always search for the reviews of verified customers, and I can only agree with it, according to my own experience with Amazon reviews. Believe me about it, as I have written 36 reviews as of today, but only about products that I have bought on Amazon and which are therefore all verified customer reviews for good.

I can therefore only agree that Reviewmeta, its rating system of Amazon reviews, and the fact of how childish and angry they behave if they are the ones being criticised, are proof that this website is NOT reliable at all! My thanks to David Gaughran, for being one of the few people out there writing the truth about Reviewmeta, as there are not many sources on the internet like this!

Spot on Christian. I’ve been going over the ReviewMeta site for a week or so and found some egregious discrepancies in various categories. The way they weight certain sub-categories makes no sense at all. Their reply to your negative review was unprofessional and condescending. I’m going to review them on TrustPilot and probably give them 2 stars, maybe 3 if I find more compelling reasons. It’s not all bad, just a large part of it. They’re also likely getting paid by at least some sellers which would cause questions to arise.

What makes the problem complicated is that Amazon is not being transparent to either the brand side or the consumer side. They are using the Amazon Vine program to encourage fake reviews exclusive to the company. That’s why we get so many fake reviews. We should protest to the government!!

I’ve read your article and believe you missed a big part about it. I’m working a lot with data and here is what I can say.

The adjusted rating is very similar to your original rating. Of course it’s difficult to say which reviews are real or fake, but if an article has about 50 reviews you can determine at least to some degree if it’s real. The more reviews it has, the more reliable the outcome is.

Your data tells us a lot. If you never bought reviews on your product, we have some very valuable data here and I’m thankful for that. For example, you can say that if a product has more than 50% fake reviews there is a big chance that reviews are fake. Also, your adjusted ranking is never more than 0.2 points away from the original.

We can say that if an article has > 50% fake reviews and > 0.2 adjusted difference there is a big chance of fake reviews and we should be suspicious.
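The rule of thumb proposed in this comment can be written down as a short Python sketch. The function name `looks_suspicious`, its parameter names, and the sample numbers are hypothetical; the two thresholds (50% and 0.2) are the commenter’s own, and nothing here reflects how ReviewMeta itself scores products.

```python
def looks_suspicious(flagged_share, original_rating, adjusted_rating,
                     share_threshold=0.5, rating_threshold=0.2):
    """Apply the commenter's two-part rule: both conditions must hold."""
    # Round to two decimals so floating-point noise does not tip a borderline case.
    rating_shift = round(abs(original_rating - adjusted_rating), 2)
    return flagged_share > share_threshold and rating_shift > rating_threshold


# Hypothetical products: (share of reviews flagged, original rating, adjusted rating).
print(looks_suspicious(0.6, 4.6, 4.1))  # both conditions met
print(looks_suspicious(0.6, 4.5, 4.4))  # many flags, but the rating barely moves
print(looks_suspicious(0.3, 4.6, 4.1))  # rating moves, but few reviews are flagged
```

Requiring both conditions is what keeps a product with many flagged but otherwise typical reviews from being treated as suspicious. Whether these particular thresholds are sensible is a separate question that one author’s data cannot settle.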

Something has to be done about this slanderous site. I encourage every author to file a google complaint about their reputation being tarnished by this cretin.

https://support.google.com/legal/answer/3110420

One of my wife’s reviews was flagged for having the following phrase in more than one review: “I would recommend this book”. If that is suspicious, then so are puppies and rainbows.

They also placed a warning that over 10% of the reviews mentioned that they were incentivized. Someone should tell them that Amazon allows authors to give free review copies of books, even though they have made it against the rules to incentivize reviews for other kinds of products.

How nice it would be if somebody who actually knew what they were doing could come up with an effective algorithm. I think that you nailed something very important – that book reviews are a completely different animal than reviews of other products. And maybe that is the Zon’s problem too, they are applying the same data set to book reviews as they are to spoons and tablecloths or various other widgets. Of course you would get repeat reviews by the same readers if you are writing a series – that’s the whole idea of series, to offer a reader more than one chance to be with your characters. Once again, thanks for all you do.

I’m with you, David! I was astounded at how my books failed. Although this one book had 4-5 star reviews, they gave 100% trustworthiness to the one review that hated my book and gave a 2 star. If people look at these ratings on my books, I’ll never sell another copy.

David–Thanks so much for this enlightening information. I wrote a piece on Sunday about the 1000s–maybe 100s of 1000s–of Amazon users whose accounts have been deleted in the last month because they broke some rule nobody will tell them about. Now I think I see what’s happening. If somebody mentions the name of the book, reviews a series, or commits one of ReviewMeta’s “infractions”, they’re flagged as “bad actors” and Amazon deletes their accounts. No explanation, No refunds. Their stories are heartbreaking. I’ve updated my post with this information and a link to this post. http://annerallen.com/2018/04/amazon-paid-reviews/

I find it interesting that they flagged my reviews for “overrepresented word groups” which means, as near as I can determine, that the reviews for my books tend to be longer than reviews for a typical product. Well, I don’t write typical books, and a lot of my reviewers spent a lot of words discussing them. They seem to think that’s a bad thing.

I am very sceptical of reviews for anything after 15 years of internet purchases. One person’s view of a riveting ‘best-seller’ has previously turned into a wasted hour or so of my precious reading time and yet another paperback to the charity store – which I think they don’t need. I do find the sample chapters sometimes useful so you can get a sort of a feel for a style. No doubt R####w M#t# will have its brief moment in the sun and sink without trace soon enough. And no, I am not a robot.

Thanks again for such an entertaining and informative blog David. I always learn something! And it’s nice to see so many people are so supportive of each other.

Roger

I meant to work in a mention of JA Sutherland’s post from 2016 on ReviewMeta. You’ll see his experience was similar to mine – the system incorrectly identified questionable reviews on his books, and when he attempted to point that out in the comments at ReviewMeta… they deleted his comments: http://www.jasutherlandbooks.com/2016/09/27/no-reviewmetas-not-ready-for-prime-time-at-least-for-books/

Ugh. Once again, far too much trust is placed in a new technology before it’s really been shown to work. Or before it matures to the point where it actually can be trusted. 🙁

Back in early 2016 I found a company with a similar service called Fakespot. When I first tested it I kept seeing obviously bogus scores for book reviews.

https://the-digital-reader.com/2016/01/25/spot-fake-kindle-reviews-with-fakespot/

Fakespot had to go rework their algorithms to take into account quirks of the book community social graph.

https://the-digital-reader.com/2016/01/31/fakespot-responds-to-complaints-over-book-review-ratings-promises-changes/

Or at least that is what I thought at the time. I just checked Fakespot today and they agreed with ReviewMeta that you are a sketchy character. Funny thing is, the two companies can’t agree on which books have fake reviews:

https://www.fakespot.com/company/david-gaughran

lolcry

Considering they can’t even agree how many books I have (they’re both wrong!), I’m going to go out on a limb here and suggest they are equally unreliable…

All the more reason for authors to organize and leverage a united clout. Though I hear you, David, about the importance of valid reviews, I’ve been saying what Kaytee has said for years. “Reviews” is broken. (Although they’re actually mostly “reader comments,” not reviews, technically. Still relevant, of course, if “real.”) The folks who would write intelligent and insightful reviews–other authors–often get their fingers smacked for doing so.

So … hmmmm … is it not of peculiar interest that Jeff owns The Washington Post? What’s afoot?

The Washington Post has published articles that have been both highly critical of Amazon, and also some very positive coverage too. Doesn’t appear to be any major discernible slant to coverage that I’ve seen.

What about the reviews that look like they’re part of an RP forum? I’ve seen a ton of them on BN.com and wouldn’t be surprised if they are on Amazon too. Are these flagged as fake reviews?

Excuse my ignorance, but what’s an RP forum? Do you mean those weird reviews on B&N which are part of some bizarrely meta roleplay?

Yes, that is exactly what I mean.

Are these the same folks as Fakespot? I had them assess my books and they not only declared I had many fake reviews (I don’t as I am author/publisher/marketer of all my books) but then they tweeted that fact to the entire world using my book title as hashtag. I took it up with them, as did a lot of indie authors, and they reworked their algorithm when they finally realized that reviewing books is a bit different…

Different guys, and I haven’t looked into Fakespot at all, but not surprised to hear that they might have had similar issues.

A while back Fakespot gave my two major works decent ratings. I just ran the ratings again, and they gave a C and a D. They’ve played with their algorithms, too, and I’d now classify them as Not Reliable.

To be quite honest, given that most of the reviews are from verified purchases, I’m a little pissed.

Ah well. If that’s the worst thing that happens to me this week, it’ll be a good week.

I’m very glad to have this info. How bizarre is this? And for them not to want feedback is not very bright.

It makes sense if all they want is publicity, and not to actually build a genuine tool which works (and doesn’t essentially accuse honest people of cheating).

OMG! Giant tubs of lube??! I laughed so hard…

Sincerely, Dean Kutzler Philadelphia’s Thriller Author https://www.deankutzler.com EMAIL: Deankut@gmail.com

Dean Kutzler, is a #1 bestselling author that writes fast-paced thrillers entrenched with surprisingly true facts hidden around the world.


Truly, the best thing Amazon could do is get rid of the reviewing altogether. There is so much fraud, and literally, even industries built up around devising ways to pull that off. Many of the folks who do so well on Amazon are the ones who are willing to pay said companies for the reviews.

Honestly, the worst fraud doesn’t involve reviews. With books at least, I think the problem is overstated to an extent (and more serious problems ignored). There are issues with reviews – absolutely no argument that there are unscrupulous sellers (and buyers) of fake reviews. And there are authors/publishers who constantly break the rules in terms of inducements and so on. And Amazon has botched the whole issue. But I’d prefer to fix it than write off something which helps readers find books they like and helps authors find their target readers.

Thank you so much for tackling this bizarre organization (but we live in an increasingly crazy world) and doing so as elegantly and truthfully as is allowed.

One question, inspired by ‘follow the money’ – how does ReviewMeta attract its revenue? Who pays it for flawed data and why?

Thank you.

That’s where things get interesting, as they always do. The guy behind it runs another site which *drum roll* provides reviews on stuff like supplements. Plus he has monetized his sites with ads and affiliate relationships. So he seems to have an interest in overstating the problem. An incentivized meta-reviewer, if you will.

Well, who’d’a thunk, eh?

David, I think… Never mind, I mentioned your name, I must be some sort of bot or unnatural commenter.

When the Amazon site requires a $50 purchase to be entitled to review every product whether purchased or used, and Goodreads, their other site, has a competition to see who can post the most fake reviews, then both their APIs are frauds. There’s also a pesky FTC regulation that makes it a crime to give false testimonials for products never bought or used that Amazon seems to think doesn’t apply to them. I have been saying since 2013 that the reviews on Amazon are worthless.

From a customer’s perspective, reviews are vital, and detecting fake ones is just as vital. We want good value for our money, not a ripoff, even if it’s cheap. I use fakespot myself, but I could wish for a simple mechanism such as “eliminate reviews that aren’t verified purchasers” rather than relying on a third party’s secret assumptions about how to detect fake reviews.

Wow, just checked out one of my own books – they failed it on something they call ‘reviewer ease’, here is what it says: ‘The ease score is the average rating for all reviews that a given reviewer submits. The average ease score for reviewers of this product is 4.5, while the average ease score for reviewers in this category is 4.3. Based on our statistical modeling, the discrepancy in average rating between these two groups is significant enough to believe that the difference is not due to random chance, and may indicate that there are unnatural reviews.’

On what planet does a difference between 4.5 and 4.3 count as statistically significant?

Right! And even leaving that aside, what does this tell us anyway? That your books are slightly better than average? How suspicious!
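On the narrow statistical question, a gap of 4.5 versus 4.3 can pass a significance test if enough reviewers are involved, which is a different thing from the gap being large or meaningful. The Python sketch below illustrates this with a plain normal-approximation test. Only the two means come from the quoted report; the standard deviation, the sample sizes, and the helper name `two_sided_p` are assumptions for illustration, and the quoted text does not say what model ReviewMeta actually uses.

```python
import math


def two_sided_p(mean_a, mean_b, sd_a, sd_b, n_a, n_b):
    """Two-sided p-value from a normal approximation to the difference of two means."""
    standard_error = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    z = abs(mean_a - mean_b) / standard_error
    return math.erfc(z / math.sqrt(2))


# Hypothetical inputs: only the means 4.5 and 4.3 come from the quoted report.
CATEGORY_N = 100_000   # assumed number of reviewers in the whole category
ASSUMED_SD = 1.0       # assumed spread of reviewers' average ratings

p_few_reviewers = two_sided_p(4.5, 4.3, ASSUMED_SD, ASSUMED_SD, 20, CATEGORY_N)
p_many_reviewers = two_sided_p(4.5, 4.3, ASSUMED_SD, ASSUMED_SD, 200, CATEGORY_N)

# Standardized effect size (Cohen's d) is the same however many reviewers there are.
effect_size = (4.5 - 4.3) / ASSUMED_SD

print(p_few_reviewers, p_many_reviewers, effect_size)
```

Under these assumptions the gap is not significant at the usual 5% level with 20 reviewers but is with 200, while the effect size is the same small value in both cases. So a significance test can only say the gap is unlikely to be noise. It says nothing about why the gap exists, and readers who liked Book 1 enough to review the sequel is an obvious innocent explanation.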

That is so bizarre…

Isn’t it just!

I feel your pain as a reviewer. I have had a number of my very legitimate reviews taken down for sexual content. Now I am a marriage, relationship and sexual coach and I was reviewing books with large sexual content and they claimed that I violated their standards (which I did not, I have over 800 reviews published)